Shadow AI in the Workplace: The Hidden Security and Compliance Risks (And How To Avoid Them)

When employees enter sensitive data into unauthorized AI tools, a practice known as shadow AI, they create security risks, compliance gaps, and unreliable outputs. A study found that 80% of IT security professionals use AI tools without company approval.

As AI adoption grows, workplaces need to strengthen governance, secure IT infrastructure, and adopt clear policies to limit the use of shadow AI.

This article explores what Shadow AI is, its risks, and effective strategies to manage its impact in the workplace.

What Is Shadow AI?

Shadow AI refers to employees using AI tools without IT approval.

These tools operate outside company security controls, making it difficult for IT teams to track, secure, or regulate their use.

Employees often rely on shadow AI apps to complete tasks faster, especially in high-pressure environments. A common example is generative AI, where employees upload confidential reports, financial data, or customer information into tools like ChatGPT to generate content.

However, these platforms store and process data externally, meaning businesses lose control over sensitive information, increasing the risk of leaks, unauthorized access, and regulatory fines.

Shadow IT versus Shadow AI: The Difference

Shadow IT refers to employees using software, hardware, or cloud services without the approval of the IT department.

Shadow AI is a subset of Shadow IT that involves AI tools capable of storing, processing, and learning from company data.

Both shadow IT and shadow AI require governance, but AI tools pose greater data security and compliance risks.

Why Do Employees Use Shadow AI?

Here’s why employees use shadow AI for workplace-related tasks:

Lack of AI Governance Frameworks

A study found that the lack of AI governance frameworks leaves employees without access to secure, organization-approved AI tools. When businesses fail to integrate AI solutions into daily workflows, employees adopt third-party AI applications to automate repetitive tasks, generate reports, or analyze data.

Accessibility of AI Tools

With AI applications like ChatGPT readily available, employees can easily integrate them into their work without IT approval. These tools provide instant solutions but operate outside IT security controls.

Pressure for Productivity

Employees use AI tools to cope with tight deadlines and heavy workloads. These tools help them speed up tasks and meet performance expectations. Whether it’s generating reports or processing large datasets, AI can reduce manual effort.

IT Restrictions

Traditional IT infrastructures are often too slow, complex, or restrictive for AI applications, which leaves employees frustrated with outdated systems. Rather than waiting for approvals or struggling with limited internal tools, they adopt AI-driven solutions that provide immediate results.

Lack of Awareness

Many employees assume that widely used AI tools are safe, without realizing that these platforms store, process, and potentially expose sensitive company data. This misunderstanding increases the risk of data leaks, compliance violations, and unauthorized information sharing.

The Risks of Shadow AI

Here’s what happens when your employees use shadow AI:

Data Privacy Concerns

Employees using shadow AI tools often feed them sensitive corporate data, which puts confidentiality at risk. Without proper monitoring, you can’t track where or how these tools store and process that data.

Regulatory and Compliance Violations

When employees use shadow AI, you can’t control how data is processed, stored, and shared. This can lead to non-compliance with regulations such as GDPR (General Data Protection Regulation) and HIPAA (Health Insurance Portability and Accountability Act), and with rules enforced by regulators such as the SEC (Securities and Exchange Commission).

For example, shadow AI apps in healthcare and education can expose protected health information (PHI) and student records, violating HIPAA and FERPA (Family Educational Rights and Privacy Act).

You may face lawsuits, fines, and reputational damage if your AI systems lack proper security controls, data retention policies, or encryption.

Financial Fraud and Market Manipulation

Studies show shadow AI tools increase risks of identity fraud, insider trading, and pump-and-dump schemes. These tools can generate deepfake identities, fake documents, and AI-generated phishing emails, making it easier to bypass security checks and commit financial fraud.

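Did you know? A United Nations Office on Drugs and Crime (UNODC) report estimated that scams targeting victims in East and Southeast Asia caused losses of up to $37 billion in 2023, with criminal groups increasingly using AI tools such as deepfakes.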

Algorithmic Bias

Shadow AI can skew decision-making and expose you to legal and reputational harm. Since shadow AI tools operate without oversight, employees may unknowingly use biased AI models for hiring, promotions, performance evaluations, and customer interactions.

For example, Amazon discontinued an AI recruitment tool after discovering it favored male applicants over female ones. The system was trained on resumes submitted over a ten-year period, which were predominantly from men.

Cybersecurity Threats

Cybercriminals can exploit vulnerabilities in shadow AI tools to gain access to your IT systems. When employees input confidential information into shadow AI tools, the data may be stored in unsecured environments without encryption or access controls.

Cybercriminals can intercept, extract, or manipulate this data. They can even alter AI outputs, gain unauthorized access, or launch broader cyberattacks.

Common Shadow AI Examples in the Workplace

Here are some common examples of shadow AI in the workplace:

- AI transcription tools: Employees use tools like Otter.ai and Fireflies to transcribe meetings, risking unauthorized storage of sensitive conversations.

- AI generators: AI models like ChatGPT create text, images, and code. When employees use them through personal or unmanaged accounts, the company's data privacy controls do not apply, which can lead to leaks of proprietary information.

- AI chat assistants: Customer service teams use shadow AI chatbots to handle inquiries or automate responses, which can pass customer information to tools that IT has not vetted.

- AI browsers and extensions: Shadow AI browser plugins analyze or generate content but can expose user activity and confidential data.

- AI proxies: Employees use shadow AI proxies to bypass network restrictions, creating security vulnerabilities and unauthorized data access risks.

- AI-embedded hardware: Unauthorized AI-powered devices process data locally. But without IT oversight, they introduce security gaps and data privacy risks in workplace networks.

Strategies to Mitigate Shadow AI Risks

Here’s how you can mitigate shadow AI risks:

Establish Clear AI Policies

You need to establish company-wide AI policies that define which AI tools employees can use, how they should handle sensitive data, and the consequences of using unauthorized AI. This helps you regulate AI deployment and usage.

Each workplace has different needs and workflows. Conduct regular compliance audits and risk assessments to detect common shadow AI tools in use and create policies accordingly.
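
One way to make such a policy checkable by tooling is to express it as data. The sketch below is only an illustration under stated assumptions: the tool names, the three sensitivity labels, and the `is_allowed` helper are hypothetical and would need to be replaced with your own approved-tool register and data classification scheme.

```python
from dataclasses import dataclass

# Data sensitivity levels, lowest to highest (illustrative labels).
SENSITIVITY = {"public": 0, "internal": 1, "confidential": 2}


@dataclass(frozen=True)
class ToolPolicy:
    name: str
    max_sensitivity: str  # highest data class the tool may receive


# Hypothetical approved-tool register; the names are placeholders.
APPROVED_TOOLS = {
    "internal-assistant": ToolPolicy("internal-assistant", "confidential"),
    "vendor-chatbot": ToolPolicy("vendor-chatbot", "public"),
}


def is_allowed(tool: str, data_class: str) -> bool:
    """Return True if the tool is approved for data of this sensitivity."""
    policy = APPROVED_TOOLS.get(tool)
    if policy is None:
        return False  # unlisted tools are treated as shadow AI
    return SENSITIVITY[data_class] <= SENSITIVITY[policy.max_sensitivity]
```

Treating any unlisted tool as disallowed keeps the default safe: a new AI app has to be reviewed and added to the register before it can be used with company data.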

Use Monitoring Systems

Use AI detection and tracking tools to identify, analyze, and control the use of shadow AI apps. These systems can track data flow, flag unusual AI activity, and alert IT teams when employees use unapproved AI tools.

Monitoring AI interactions can help you detect security threats, enforce compliance policies, and prevent data leaks before they cause harm.
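
As a rough illustration of how unapproved AI use might be flagged, the sketch below scans web proxy log lines for requests to known AI services that are not on an approved list. The log format (`<timestamp> <user> <url>`), the domain lists, and the `flag_unapproved_ai` function are assumptions made for this example; a commercial monitoring product would work differently and cover far more cases.

```python
from collections import Counter
from urllib.parse import urlsplit

# Assumed lists; maintain your own from audits and vendor documentation.
KNOWN_AI_DOMAINS = {"chat.openai.com", "chatgpt.com", "otter.ai", "fireflies.ai"}
APPROVED_AI_DOMAINS = {"otter.ai"}


def flag_unapproved_ai(log_lines):
    """Count requests per (user, host) to AI domains that are not approved.

    Each line is assumed to look like: "<timestamp> <user> <url>".
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed lines
        _, user, url = parts
        host = (urlsplit(url).hostname or "").lower()
        if host in KNOWN_AI_DOMAINS and host not in APPROVED_AI_DOMAINS:
            hits[(user, host)] += 1
    return hits
```

This version matches hostnames exactly, so subdomains would need to be listed or handled with suffix matching. The resulting counts could feed an alert to the IT team or a periodic compliance report.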

Train Your Employees

Educate your staff about the risks, best practices, and responsible use of AI tools in the workplace. You can provide training modules, regular workshops, AI literacy sessions, and cybersecurity awareness training to help employees recognize shadow AI tools and rely on them less. This will encourage them to use approved AI tools safely.

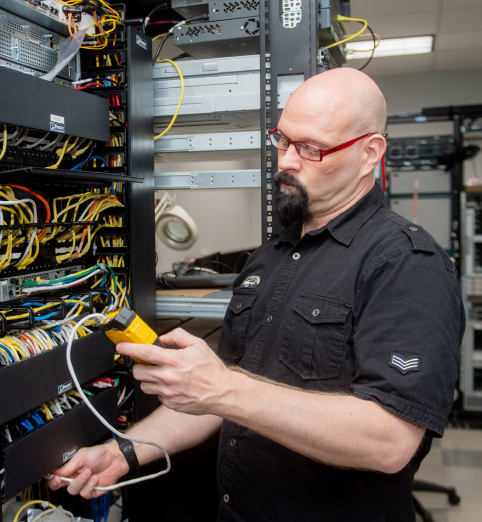

Update Your IT Infrastructure

Legacy systems are vulnerable to cyberattacks because of weak encryption, poor access controls, and unpatched vulnerabilities. Update your IT infrastructure with AI-powered threat detection, optical networking for faster data transmission, stronger access controls, and behavior-based anomaly detection so that shadow AI use is easier to detect and limit.
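
To give a sense of what behavior-based anomaly detection means in practice, the sketch below compares a user's activity today (for example, megabytes uploaded to external services) against that user's own history and flags large deviations. The `is_anomalous` function, the choice of metric, and the threshold of three standard deviations are assumptions for illustration; production systems typically use richer models.

```python
from statistics import mean, stdev


def is_anomalous(history, today, threshold=3.0):
    """Flag today's value if it is more than `threshold` standard
    deviations above the user's own historical mean."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today > mu  # flat baseline: any increase stands out
    return (today - mu) / sigma > threshold
```

Comparing each user against their own baseline, rather than a company-wide average, avoids flagging roles that legitimately move large volumes of data every day.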

To protect sensitive data, upgrade to AES-256 encryption for data confidentiality and deploy AI-driven network monitoring tools to detect Shadow AI activity.

You can adopt a cloud-based AI governance system with secured APIs and controlled AI sandboxes so employees have fast, compliant, and enterprise-approved AI alternatives.
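
A governed AI gateway of this kind could, for instance, redact obvious identifiers before a prompt leaves the company network. The sketch below is a minimal example of that single step; the `redact` function and the three regular expressions are illustrative assumptions, and real deployments would need broader, well-tested detection rules.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

For example, `redact("Email jane.doe@example.com about it")` returns `"Email [EMAIL] about it"`, so the approved AI tool still receives a usable prompt without the personal detail.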

Invest in AI-Compatible Hardware

Invest in high-performance computing (HPC) hardware such as GPUs (like the NVIDIA A100), TPUs, and edge devices. This hardware can handle AI workloads, process data faster, and train AI models on infrastructure you control, which makes it easier to apply your own security controls.

By providing employees with secure, company-approved AI resources, you can reduce their reliance on shadow AI tools. This also helps you protect sensitive data while allowing faster, smarter decision-making.

Final Thoughts

Shadow AI can compromise workplace security, compliance, and data integrity when it’s misused or overlooked. When employees use unapproved AI tools, they increase your business’s exposure to cyber threats, regulatory penalties, and operational disruptions.

To address this, you need a structured AI governance strategy, which includes clear policies, monitoring tools, employee training, and secure IT infrastructure. By strengthening security measures and providing approved AI tools, you can reduce the risks of shadow AI in the workplace.

Inteleca helps you improve your IT infrastructure with tailored hardware procurement solutions. Our experts provide a more controlled, secure, and scalable IT environment to mitigate security vulnerabilities.

Contact our team to learn more about our IT service solutions.