How AI Data Centers Can Optimize Infrastructure to Meet Future AI Demands

Demand for artificial intelligence (AI) is driving tech giants like Google, Microsoft, and Meta to invest billions of dollars in AI data centers that supply the compute and infrastructure needed to run AI-powered applications.

Today, almost 77% of devices use AI in some form, including virtual assistants, generative AI, and machine learning models. These technologies rely heavily on data centers, yet most lack the infrastructure to handle AI’s resource-intensive workloads.

To keep up, data center infrastructure must evolve, whether through hardware upgrades, network design optimization, or sustainable solutions, to handle larger data flows, reduce energy consumption, and address heat challenges.

In this article, we’ll discuss how data center infrastructures work, the challenges they face, and how data centers can optimize their infrastructures to meet future demands of AI.

Key Takeaways

- Traditional data center infrastructures can’t handle AI’s resource-intensive workloads, which involve massive data flows, real-time processing, and heavy heat generation.

- AI data centers must address high power consumption, water usage, and environmental impact by adopting sustainable solutions like liquid cooling systems and renewable energy.

- Inteleca experts help data centers update infrastructure.

The Core Infrastructure of AI Data Centers

AI data centers rely on the following core infrastructure to handle their workloads:

High-Performance Computing

AI models need high-performance computing nodes to handle big data and complex math calculations.

AI-specialized processors like graphics processing units (GPUs), tensor processing units (TPUs), and field-programmable gate arrays (FPGAs) can run many operations in parallel, which makes them better suited to large AI tasks than general-purpose central processing units (CPUs).

These processors can train neural networks, analyze massive datasets, and run predictions (inference) faster while saving energy.

Liquid Cooling System for Transferring Heat

High-performance systems generate massive amounts of heat during tasks like training machine learning models. To keep things cool, AI data centers increasingly rely on liquid cooling systems, which are more effective than air cooling at transferring heat, especially for high-performance AI workloads.

According to Dr. Tim Shedd at Dell, liquid cooling can handle up to 1,500 watts per socket, whereas air cooling tops out at around 10 kW for an entire rack. This capability lets data centers run powerful, high-density servers without overheating or damaging hardware.
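The two figures above use different units (per socket versus per rack), so a quick calculation helps put them on the same footing. The Python sketch below estimates how many 1,500 W sockets would fit inside a 10 kW air-cooled rack budget. It is a back-of-the-envelope illustration only: it assumes every socket runs at the full 1,500 W and ignores memory, storage, networking, and fans. The function name `sockets_within_budget` is made up for this example.

```python
def sockets_within_budget(rack_budget_watts: float, watts_per_socket: float) -> int:
    """Return how many sockets fit in a rack's heat budget, rounded down."""
    if watts_per_socket <= 0:
        raise ValueError("watts_per_socket must be positive")
    return int(rack_budget_watts // watts_per_socket)


# Figures quoted in the article.
AIR_COOLED_RACK_WATTS = 10_000      # around 10 kW per air-cooled rack
LIQUID_COOLED_SOCKET_WATTS = 1_500  # up to 1,500 W per socket with liquid cooling

# Assumption: every socket draws the full 1,500 W and nothing else adds heat.
max_sockets = sockets_within_budget(AIR_COOLED_RACK_WATTS, LIQUID_COOLED_SOCKET_WATTS)
print(f"Full-power sockets within a 10 kW rack budget: {max_sockets}")
```

Under these simplified assumptions, only a handful of full-power sockets would fit within an air-cooled rack's budget, which is why dense AI racks tend to push operators toward liquid cooling.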

Some companies are taking it a step further by cooling with natural bodies of water. Microsoft, for example, has tested underwater data centers. It believes natural cooling can cut costs by up to 95%.

While cooling addresses the immediate heat challenge, data centers face broader infrastructure challenges that require redesign and sustainable solutions.

Challenges in AI Data Center Design

Here are some of the challenges AI data centers are facing:

High Power Consumption and Water Wastage

AI data centers housing high-performance GPUs and CPUs need massive amounts of power to train generative AI or machine learning models. For example, a study found that training GPT-3 consumed about 1.2 million kilowatt-hours (kWh) of electricity, with resulting emissions reportedly comparable to the lifetime emissions of five average cars.

For most data centers, running high-intensity workloads on the same traditional infrastructure means generating more heat. As a result, they need substantial amounts of water to keep equipment cool. According to an NPR report, a mid-sized data center uses about 300,000 gallons of water per day.

To address this, AI data centers need high-performance computing systems and carbon-neutral networks powered by renewable energy sources like solar power to minimize their environmental impact and water usage.

Scalability Limitations

AI data centers that use both GPUs and CPUs to handle AI workloads need a scalable network design to interconnect and synchronize data across various compute nodes (servers or devices).

Unlike traditional systems, AI workloads produce “elephant flows”: very large transfers (terabytes or petabytes of data) that move between clusters of GPUs as they work on a shared task. Handling flows of this size requires a highly adaptive infrastructure, especially as future AI models grow more complex.

As companies adopt AI-powered applications, data centers need hybrid systems that can process multiple data types, such as text, images, and video, across edge computing and cloud servers.

This means moving beyond centralized setups to distributed architectures where computing, data processing, and storage are spread across multiple interconnected nodes to meet the growing demands of AI.

Latency & Connectivity Requirements

Real-time applications like voice assistants, video analytics, or autonomous drones rely on decisions made in milliseconds. AI data centers need ultra-high bandwidth for data transfer between GPUs (where processing happens) and storage (where data is stored) for future AI models.

For example, an autonomous vehicle is predicted to generate up to 40 TB of data per hour from sensors like radars and cameras. GPUs will need constant, ultra-fast access to data for decision-making, which is critical in high-stakes scenarios like driving.
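To see what that volume means for network design, the Python sketch below converts 40 TB per hour into an average sustained data rate. It is a rough illustration that assumes decimal terabytes (10^12 bytes) and data spread evenly across the hour; real sensor traffic is bursty, so peak rates would be higher. The function name `sustained_gbit_per_s` is made up for this example.

```python
def sustained_gbit_per_s(terabytes_per_hour: float) -> float:
    """Convert a data volume in TB/hour to an average rate in Gbit/s."""
    bytes_per_hour = terabytes_per_hour * 1e12   # assumption: decimal terabytes
    bits_per_second = bytes_per_hour * 8 / 3600  # 8 bits per byte, 3600 s per hour
    return bits_per_second / 1e9                 # bits/s -> Gbit/s


# Figure quoted in the article: up to 40 TB of sensor data per hour.
rate = sustained_gbit_per_s(40)
print(f"40 TB/hour is about {rate:.1f} Gbit/s sustained")
```

The result is just under 90 Gbit/s for a single vehicle at the upper end of the estimate, which hints at why processing some of that data close to where it is produced is attractive.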

Most data centers still rely on older network designs, like three-tier models, which aren’t built for fast communication between GPUs and storage. Upgrading to modern, high-speed connections is expensive and often requires AI-specific hardware solutions.

Decommissioning High-Performance Computing Systems

Another challenge for data centers involves decommissioning high-performance computing (HPC) systems. These systems include specialized hardware like GPUs and advanced cooling units, which require careful handling and proper disposal to avoid risks.

Data centers need skilled expertise to retire outdated equipment, relocate assets, or migrate systems in a way that is both environmentally responsible and compliant with industry regulations.

IT Asset Disposition (ITAD) providers like Inteleca can play a critical role in overcoming decommissioning challenges. We take a customer-centric approach to securely handling equipment, ensuring certified data destruction, facilitating e-waste recycling, and refurbishing hardware for resale.

Innovations Driving the Future of AI Data Centers

Custom AI Hardware

AI-specific hardware, such as GPUs, TPUs (Tensor Processing Units), and FPGAs (Field-Programmable Gate Arrays), plays a critical role in handling advanced AI workloads.

While GPUs remain essential for AI data centers, TPUs complement them by accelerating specific machine learning tasks, such as the matrix operations (mathematical computations on arrays of numbers) used to train and run deep learning models.
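For readers unfamiliar with matrix operations, the Python sketch below shows the kind of calculation involved: multiplying a small matrix of inputs by a matrix of weights, as a single neural network layer would. It uses plain Python lists for clarity and is not how accelerators implement the operation; they run many of these multiply-and-add steps in parallel. The `matmul` helper and the sample values are made up for this example.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply matrix a (m x n) by matrix b (n x p) using plain Python lists."""
    if any(len(row) != len(b) for row in a):
        raise ValueError("inner dimensions must match")
    # zip(*b) iterates over the columns of b.
    return [
        [sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
        for row in a
    ]


# Hypothetical example: 2 input samples with 3 features each,
# passed through a layer with 3 inputs and 2 outputs.
inputs = [[1.0, 2.0, 3.0],
          [4.0, 5.0, 6.0]]
weights = [[0.5, -1.0],
           [1.0, 0.0],
           [0.0, 2.0]]

outputs = matmul(inputs, weights)
print(outputs)  # [[2.5, 5.0], [7.0, 8.0]]
```

Each output value is a sum of independent multiplications, so the work splits naturally across many processing units. Deep learning models repeat this pattern at a far larger scale.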

Currently, data centers are adopting high-density computing environments with hardware that supports larger datasets and parallel processing capabilities. This will allow them to tackle the growing demands of generative AI and machine learning models.

Data centers are also adopting edge computing to process data closer to its source. This allows faster, localized processing for applications like real-time video analytics, autonomous vehicles, and IoT (Internet of Things) devices, which reduces latency and improves data transfer speeds for AI-powered applications.

Sustainable Infrastructure

AI data centers are turning to sustainable practices due to growing concerns about environmental impact. From AI infrastructure design to resource planning and hardware solutions, data centers are taking an eco-friendly approach to reduce waste, reuse heat, and achieve carbon neutrality.

For example, Stockholm Data Parks in Sweden plans to transfer excess heat from data centers to warm 10% of the city’s homes. This approach shows a sustainable use case that many data centers could adopt in the future.

Another prospect is using nuclear energy to power data centers. Tech giants like Microsoft and Google are investing in small modular reactors (SMRs) to balance sustainability with the growing computational demands of AI.

Practical Considerations for Enterprises

Here’s what enterprises can do to overcome challenges in AI data centers:

Hardware Upgrades

AI data centers need hardware that handles massive data flows, reduces energy use, and supports future AI demands. GPUs like NVIDIA H100, paired with high-bandwidth memory and advanced storage, allow data centers to process and store data simultaneously without delays.

More advanced hardware generally means faster data transfer and less waste heat for the same amount of work. For example, running an AI workload on one GPU rather than two CPUs can save energy and process data faster.

Cost Implications

AI workloads consume large amounts of energy and require powerful hardware like GPUs and accelerators, both of which can be costly.

For example, building a 30 MW mid-scale data center can cost $300 million. To remain profitable, such a facility would need to generate $100 million annually just to cover operational costs like electricity and maintenance while delivering a 10% return to investors.
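The arithmetic behind that example can be laid out in a few lines of Python. This is a rough sketch, not a financial model: it assumes the 10% return is calculated on the $300 million build cost, which the example does not state explicitly, and it treats whatever revenue remains as the budget for operating costs.

```python
# Figures quoted in the article.
CAPEX_USD = 300_000_000           # cost to build the facility
CAPACITY_MW = 30                  # facility size
ANNUAL_REVENUE_USD = 100_000_000  # revenue needed per year
TARGET_RETURN = 0.10              # 10% return to investors

# Build cost per megawatt of capacity.
cost_per_mw = CAPEX_USD / CAPACITY_MW

# Assumption: the 10% return is computed on the build cost.
investor_return = CAPEX_USD * TARGET_RETURN

# Revenue left over after the investor return, available for operating
# costs such as electricity and maintenance.
implied_operating_budget = ANNUAL_REVENUE_USD - investor_return

print(f"Build cost per MW:        ${cost_per_mw:,.0f}")
print(f"Annual investor return:   ${investor_return:,.0f}")
print(f"Implied operating budget: ${implied_operating_budget:,.0f}")
```

Under that reading, the facility costs about $10 million per megawatt to build, with roughly $30 million a year going to investors and about $70 million a year left for operations.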

To manage these costs, enterprises must scale AI data center infrastructure by investing in flexible network design, high-density computing systems, advanced liquid cooling systems, and renewable energy solutions.

Additionally, exploring the secondary market for pre-owned or refurbished equipment can provide cost-effective alternatives while maintaining performance and reliability.

Work with Experts

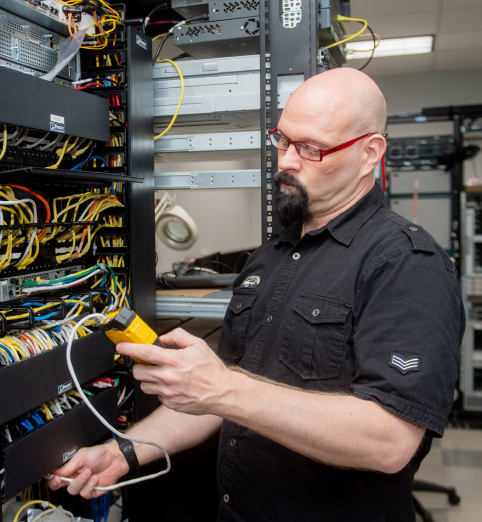

Managing an AI data center is no easy task. Industry experts like Inteleca can help optimize facility layout, inspect and install new hardware, and decommission old equipment while carefully handling sensitive data.

This ensures your sensitive data is protected and your center can adapt to the increasing demands of AI.

Scaling AI infrastructure? Schedule a free consultation with our IT experts today.

Real-world Examples

Here are real-world examples of companies moving to AI data centers to run AI-powered applications:

- Google: Google invested $33 billion in AI-related capital expenditures in 2024 to power AI applications like Google Assistant, Gemini, and Google Search. Its data centers use TPUs for faster data processing and renewable energy projects to reduce their carbon footprint.

- Microsoft: Microsoft plans to invest $80 billion to expand data centers, powering AI-driven tools like Azure OpenAI Service and Copilot. These centers rely on advanced GPUs and custom AI accelerators for efficient data handling.

- Amazon: Amazon plans to invest over $100 billion to grow AWS data centers. These facilities use custom Trainium and Inferentia chips for services like SageMaker and Alexa.

- Oracle: Oracle spent $8 billion on data centers to support OCI and AI tools like Oracle Digital Assistant. The centers use AMD EPYC processors and NVIDIA GPUs for high-performance computing.

Conclusion

As demand for AI grows, data centers will need to adopt sustainable infrastructure to store massive datasets and run the computations AI requires. But this expansion involves high costs and requires strategic planning.

The growing number of AI-powered applications requires more computational power and bandwidth than many current data centers can provide. That’s why assessing and optimizing your hardware, network, and design with expert guidance is crucial.

Data centers that align their infrastructure with future AI demands, through advanced cooling systems, scalable hardware, and energy-saving designs, will thrive. Those that fail to improve their infrastructure are likely to face financial losses and wear out their hardware in the long run.

Contact us to explore how you can future-proof your data centers and meet the challenges of an AI-driven world.

FAQs

How much energy do AI data centers use?

Data centers overall used 460 terawatt-hours (TWh) of electricity in 2022, and this figure is expected to reach 1,000 TWh by 2026. An individual data center’s energy consumption depends on its computational demands and infrastructure. As of 2025, data centers account for around 1-2% of global electricity consumption.
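To put the projection in perspective, the Python sketch below works out the average yearly growth rate implied by going from 460 TWh in 2022 to 1,000 TWh in 2026. It assumes steady compound growth across the four years, which is a simplification; the function name `implied_annual_growth` is made up for this example.

```python
def implied_annual_growth(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate between two values."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article: 460 TWh in 2022, 1,000 TWh expected by 2026.
growth = implied_annual_growth(460, 1_000, 2026 - 2022)
print(f"Implied growth: {growth:.1%} per year")
```

Under that assumption, consumption would need to grow by a little over 21% every year to reach the 2026 figure.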

What are AI data centers?

AI data centers are large facilities with thousands of high-performance systems like GPUs and TPUs to process massive datasets and handle complex AI workloads, such as training machine learning models or running neural networks.

Does AI need data centers?

Yes, AI applications need data centers to store, manage, and process large datasets and to run complex calculations behind the scenes. These facilities contain thousands of physical servers that train AI models and run their predictions.