It seems like every article I write these days sounds like it is coming from an old man, sitting on a front porch, waving his fist in the air at passers-by, and starting every sentence with, “In my day…” But, you know what? I’m okay with that. If having more than 15 years of experience in IT (and seeing its evolution during one of the most fascinating times in history) makes me a crotchety old man, then bring on the rocking chair, folks. It’s about to get real.

Now, in my day (yup, we’re starting with the old man tone right out of the gate on this one), we used to have this term called “disaster recovery.” It was a reactive term that literally meant preparing for a disaster, witnessing it happen in real time, watching in sheer panic as everything went to hell in a handbasket, then working vigorously to re-establish everything to semi-working order, just to keep the business running on one proverbial cylinder. And, by gum, we liked it that way. (As I get older, yes, I have begun sounding like an 1800s gold prospector.)

So, what’s so different now? First of all, there is really no such thing as disaster recovery anymore. Gone are the days of purpose-built off-site recovery offices, packed with computers and desks, waiting for the day the troops march in to enact the business’s recovery plan. Now, we simply have “business continuity,” a concept that entails having a dispersed virtual infrastructure that can take over in the event a building gets washed away in a tsunami. With business continuity, the data and all its applications will simply (well, perhaps not so simply, but at least far more do-ably) be reinstated and accessible through any device at a new location, be it another office, home, or coffee shop.

Yes, life certainly has changed—or has it? Sure, the technology is far more robust and, as with all things virtual and cloud-related, the data itself can be saved from Mother Nature’s wrath. But that doesn’t mean those young whippersnappers out there (you’re picturing me in overalls and a floppy cowboy hat, aren’t you?) shouldn’t worry about making sure they have the right tools for the job and preparing for the worst.

So, why prepare if technology is making things so easy? Because if you don’t have the tools, or don’t practice using them, the following can happen. Here are just a few statistics to put things into perspective:

Chances are the average company will experience a business continuity / disaster recovery event at least once during its lifespan—perhaps even a few times. And for the scary numbers portion of this tale of woe, the average outage lasts approximately 18.5 hours. In that short amount of time it can cost a small company $8,000 or more to recover, while the tab for a large enterprise can come in at close to a million dollars.

But, that’s not the worst of it. The big problem centers around false expectations. One of the major findings in a recent study done by Veeam is that four out of five organizations have an “availability gap,” meaning that 82% of survey respondents recognized the inadequacy of their recovery capabilities when compared with the expectations of the business unit itself. Furthermore, 72% said they are unable to protect their data frequently enough to ensure access to the data. Is this why we all have trust issues?

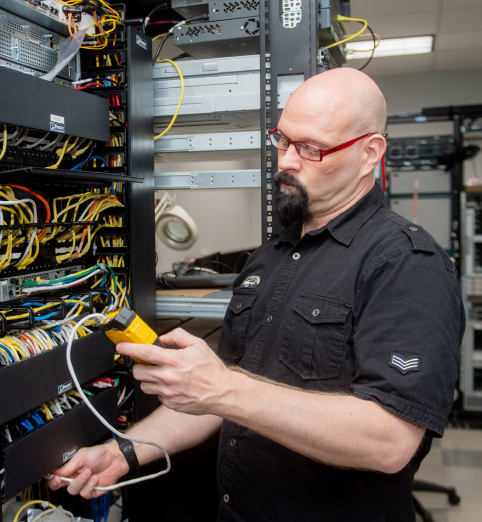

So, how does one go about truly preparing for an event? First of all, build an infrastructure with the right tools and protocols to ensure that data and applications are in check and ready for the worst-case scenario. Then… practice, practice, practice.

Setting up drills that mimic the very worst Mother Nature can offer and practicing those scenarios frequently is what makes the difference between succeeding—or not. For starters, practice a theoretical failover scenario for an application, and make it happen on a Friday afternoon—the worst of all days, because people disappear on weekends. And, though it may be an unsuccessful drill, learn from your mistakes and keep at it. Eventually, application owners will start learning more about their application and how to do a successful failover.
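For the folks who like to keep score, here is a minimal sketch of a drill log in Python. It is one possible way to track whether failovers are actually improving, not a prescribed method. Every name in it (`DrillResult`, `success_rate`, `meets_target`, `consecutive_successes`), the "payroll" application, the lessons, and the four-hour target are hypothetical and made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DrillResult:
    """One failover drill for one application (all fields are hypothetical)."""
    application: str
    succeeded: bool
    recovery_hours: Optional[float]  # None when the failover never completed
    lesson: str = ""


def success_rate(results: List[DrillResult]) -> float:
    """Fraction of drills that succeeded; 0.0 for an empty log."""
    if not results:
        return 0.0
    return sum(r.succeeded for r in results) / len(results)


def meets_target(result: DrillResult, target_hours: float) -> bool:
    """True only if the drill succeeded within the recovery time the business expects."""
    return (
        result.succeeded
        and result.recovery_hours is not None
        and result.recovery_hours <= target_hours
    )


def consecutive_successes(results: List[DrillResult]) -> int:
    """Length of the unbroken run of successes at the end of the log."""
    streak = 0
    for r in reversed(results):
        if not r.succeeded:
            break
        streak += 1
    return streak


# Illustrative data only: three Friday-afternoon drills for a made-up application.
drills = [
    DrillResult("payroll", False, None, "runbook pointed at a retired server"),
    DrillResult("payroll", True, 6.0, "DNS change took longer than planned"),
    DrillResult("payroll", True, 3.5),
]

print(f"success rate: {success_rate(drills):.0%}")
print(f"current streak: {consecutive_successes(drills)}")
print(f"latest drill within 4h target: {meets_target(drills[-1], 4.0)}")
```

Comparing each drill against the recovery time the business actually expects is what exposes a gap between expectation and capability, and a growing streak is a reasonable sign that an application is ready for tougher drills.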

And, once the failovers start working all the time for an application, be like the Beastie Boys and start sabotaging it. Old domain controllers are a good way to confound people (yes, I used the word confound like it’s 1867).

Lastly, find a partner that lives and breathes this stuff. Bring in the experts with the right tools, the in-depth knowledge, and a history of building recoverable environments. You know, the ones who show up with their very own portable front porch and rocking chair. Now, gather ’round, kids, and let me tell you tales of the olden days…